Introduction: Why Universal Context Management?

AI development is shifting from simple, single-turn prompts to sophisticated, multi-step agents that carry out complex, real-world tasks. These agents share a fundamental weakness: they lack persistent, unified memory.

Recent trends in agentic AI highlight the challenge of memory management. Large Language Models (LLMs) are essentially stateless: on their own, they retain nothing from one conversation to the next. Basic systems address this by replaying chat history, but true agentic utility requires a system that can access, understand, and use information fragmented across all the tools we use, from design files in Figma to notes in Notion and code in Claude. The siloed nature of this data is the bottleneck.

The Intent: Simple, Fast, and Organized Context

The goal of this project is to create a lightweight Universal Context Holder that acts as the agent’s centralized, user-owned memory hub.

- Gather and Manage Fragmented Context: We aim to consolidate the critical information scattered across disparate applications (Figma, Notion, source code repositories, etc.) into one accessible, organized store.

- A Focus on Efficiency: The system is designed for simple, fast, and organized management of information. By providing a clean, curated context stream, we aim to spare agents from processing massive, noisy, and costly context windows, which should mean faster inference and lower operational costs.

Why MCP?

The Model Context Protocol (MCP) is an open standard for connecting AI agents to external systems and tools. It is often described as the “USB-C” of the AI ecosystem, and it is a natural foundation for this project.

- Easy Portability from HTTP Requests: Since MCP builds on standards like JSON-RPC and often uses HTTP transport, it offers a developer-friendly path to port existing HTTP-based API logic and data services into the agent ecosystem.

- Ease of Model Communication: MCP provides a standardized way for models to discover and interact with external tools and data. By adopting this protocol, any MCP-compatible agent (including those from vendors like Anthropic, OpenAI, or Google) should be able to integrate with our context holder. MCP has also recently come under the Linux Foundation through the newly formed Agentic AI Foundation. (Reference: https://www.linuxfoundation.org/press/linux-foundation-announces-the-formation-of-the-agentic-ai-foundation)

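- Developer Friendly: The protocol simplifies the N x M integration problem, where every model needs a custom connector for every tool. With 3 models and 5 tools, for example, that is 15 custom connectors, versus 8 MCP implementations (one per model client and one per tool server). A single MCP implementation on our side makes the context holder available to every MCP-compatible agent client.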
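To make the JSON-RPC point above concrete, here is a minimal sketch of what a tool call looks like on the wire. MCP messages follow JSON-RPC 2.0, and tools are invoked with the `tools/call` method. The tool name `search_context` and its `tags` argument are hypothetical and are not taken from the repository; the helper functions are only for illustration.

```python
import json
from itertools import count

# Monotonic request ids; JSON-RPC uses the id to match responses to requests.
_request_ids = count(1)


def make_tool_call(tool_name: str, arguments: dict) -> str:
    """Serialize a JSON-RPC 2.0 request that asks an MCP server to run a tool."""
    request = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(request)


def parse_response(raw: str) -> dict:
    """Return the result of a JSON-RPC 2.0 response, or raise if it carries an error."""
    message = json.loads(raw)
    if message.get("jsonrpc") != "2.0":
        raise ValueError("not a JSON-RPC 2.0 message")
    if "error" in message:
        raise RuntimeError(message["error"].get("message", "unknown error"))
    return message["result"]


# "search_context" is a made-up tool name used only for this example.
print(make_tool_call("search_context", {"tags": ["login"]}))
```

In practice a library such as FastMCP builds and parses these messages for you; the sketch only shows why existing HTTP and JSON handlers map onto the protocol without much friction.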

FastMCP reference: https://gofastmcp.com/getting-started/welcome

Project Structure Overview

Repository: https://github.com/zealotjin/unicon

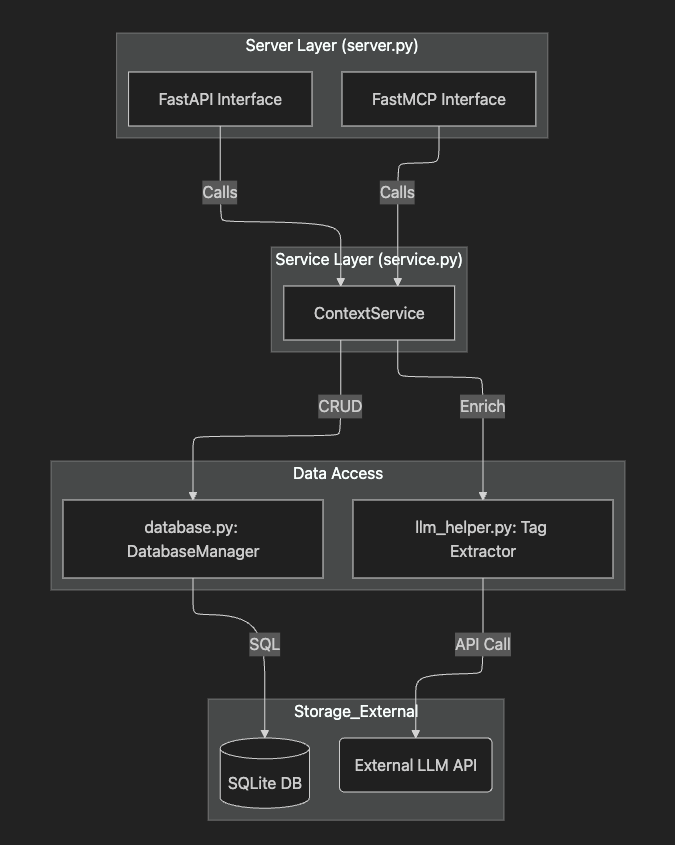

The repository is still under active development; the image above shows the current structure of the application.

Later on, I will focus more on the details of memory management, but for the time being, I intend to use tag-based search across the contexts that have been provided to ensure fast, relevant retrieval.
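As a rough illustration of the tag-based search idea, here is a minimal in-memory sketch. It is not the repository's implementation: the `ContextEntry` and `TagIndex` names, their fields, and the case-insensitive matching are all assumptions made for this example. It keeps an inverted index from tag to entry ids, so a lookup does not have to scan every stored context.

```python
from dataclasses import dataclass, field


@dataclass
class ContextEntry:
    """One stored piece of context (hypothetical shape, not the project's schema)."""
    entry_id: str
    source: str  # e.g. "figma" or "notion"
    text: str
    tags: set[str] = field(default_factory=set)


class TagIndex:
    """Inverted index from lowercased tag to the ids of entries carrying it."""

    def __init__(self) -> None:
        self._entries: dict[str, ContextEntry] = {}
        self._by_tag: dict[str, set[str]] = {}

    def add(self, entry: ContextEntry) -> None:
        """Store the entry and register it under each of its tags."""
        self._entries[entry.entry_id] = entry
        for tag in entry.tags:
            self._by_tag.setdefault(tag.lower(), set()).add(entry.entry_id)

    def search(self, tags: list[str], match_all: bool = True) -> list[ContextEntry]:
        """Return entries matching all tags (default) or any tag, sorted by id."""
        id_sets = [self._by_tag.get(tag.lower(), set()) for tag in tags]
        if not id_sets:
            return []
        ids = set.intersection(*id_sets) if match_all else set.union(*id_sets)
        return [self._entries[entry_id] for entry_id in sorted(ids)]


index = TagIndex()
index.add(ContextEntry("a", "figma", "Login screen mockup", {"design", "login"}))
index.add(ContextEntry("b", "notion", "Login flow notes", {"notes", "login"}))
matches = index.search(["login", "design"])
print([entry.entry_id for entry in matches])
```

Matching on all tags narrows the result set, while `match_all=False` widens it; which default serves agents better is an open design question for the project.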

Development Tools: Antigravity and Gemini

I’m currently using Antigravity and Gemini models for the development process, and they have significantly shaped how the project is being built.

What I Like: AI Planning and Review

The ability to have an explicit planning phase—where the model proposes a detailed plan for an implementation task, and I can review, comment on, and refine that plan before a single line of code is executed—has been invaluable. This approach dramatically reduces the risk of incorrect execution and ensures the AI’s logic aligns with the project’s architectural goals.

Improvement Focus: FastMCP OpenAPI Integration

While the Gemini models are powerful, simple prompts were sometimes not enough to guide them through complex external API documentation, and the resulting tool use was not robust. It is also possible that this documentation postdates the models' knowledge cutoff, in which case supplying the latest documentation, or asking the model to look it up, would likely have helped. (Reference: https://gofastmcp.com/servers/server#openapi-integration)
