Building a service using Large Language Models (LLMs) takes more than just writing good prompts. While “prompt engineering” has gained significant attention since the rise of LLMs, crafting prompts that consistently yield high-quality results remains a challenge. The model’s inherent performance can guarantee certain outputs, but achieving precise, controlled responses that meet business needs is still tricky. Moreover, the unpredictable nature of generative AI makes it difficult to build a service that users are willing to pay for.

Understanding the Unpredictability of LLMs

LLMs, by design, generate responses probabilistically, meaning the same prompt may yield different outputs. Although techniques like setting the temperature or providing seed values can help moderate randomness, complete control is difficult due to the diverse nature of inputs. This unpredictability becomes a major challenge when developing a service that requires structured, repeatable outputs.

References:

Crafting an Effective Prompt

The first step in prompt engineering is, of course, writing a well-structured prompt. There are numerous resources available online that explain what makes a good prompt, and a great starting point is OpenAI’s official guide on prompt engineering. However, don’t rely solely on manual prompt creation—there are tools available, such as prompt generators, enhancers, or templates, that help generate multiple prompt variations for testing.

References:

- OpenAI Prompt Engineering Best Practices

- OpenAI Prompt Examples

- Anthropic Prompt Engineering

- Anthropic Prompt Generator

Based on practical experience, here are two fundamental techniques that significantly improve prompt effectiveness:

1. Provide Examples for Structured Output

Including examples in your prompt significantly increases the likelihood of receiving responses in a specific format. If you require structured outputs such as JSON or Markdown, explicitly showing the expected format in the prompt helps guide the model’s response.

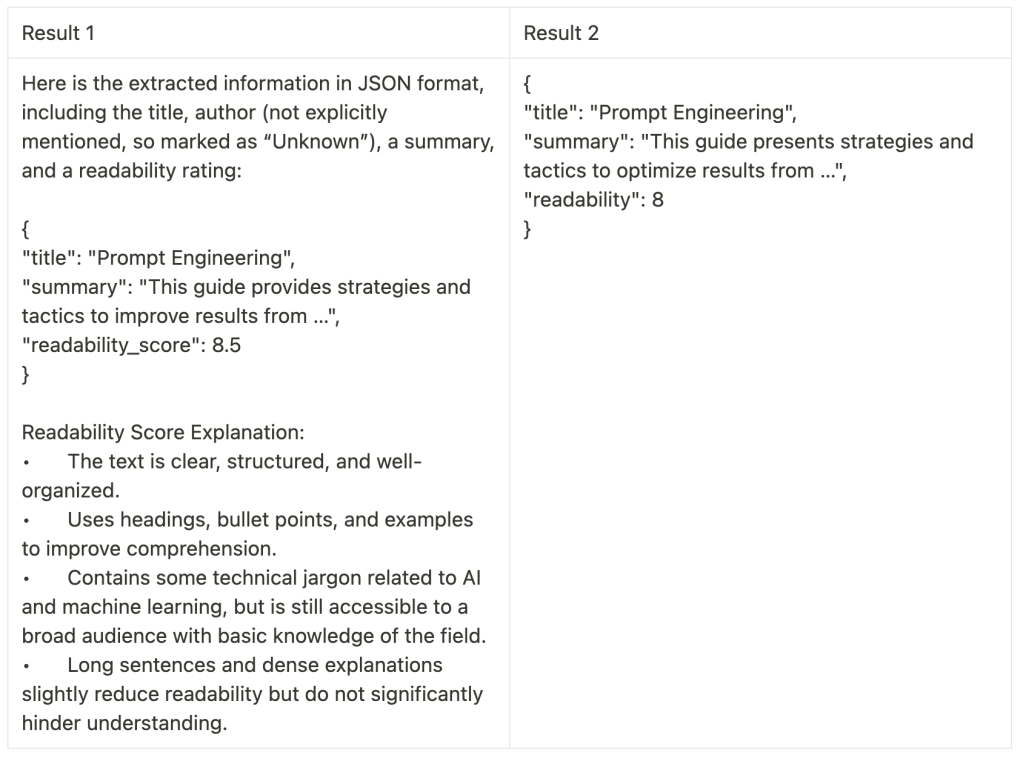

Instead of (example 1):

Extract the title, summary and rate the quality of readability from 0~10.

Use (example 2):

Extract the title, summary and rate the quality of readability from 0~10.

Example:

```

{ "title": "This is the title of the user's text", "summary": "This is the summary of the user's text", "readability": 6 }

```

This makes it easier to parse results programmatically and ensures consistency across responses.

Additionally, OpenAI’s latest models, such as GPT-4o-mini and GPT-4o, support structured outputs where JSON format is guaranteed. Leveraging these capabilities ensures a reliable and easy-to-process response structure.

Reference:

2. Define Clear Rules in Your System Message

When designing a service, you want your responses to follow rules like “No code blocks in the text,” “Do not use inappropriate words in your response,” or “Always respond in English.” These rules are best enforced when defined in the system message rather than other message types.

You can define only one system message for each conversation, and this dictates the behavior of the LLM. The instructions in this message take precedence over user messages, and using this effectively will protect your service from prompt hijacking or malicious usage of your API.

Task Decomposition vs. Chain of Thought

Many prompt engineering guides discuss the concept of Chain of Thought (CoT), which encourages breaking down complex tasks into intermediate reasoning steps. However, in practice, I have found that splitting tasks into smaller, individual sub-tasks yields more reliable results than attempting to get the model to process multiple steps in one go.

For example, instead of prompting:

Summarize this article, extract the key points, and categorize the sentiment.

You can break it into:

- Summarization Task: “Summarize this article in three sentences.”

- Key Point Extraction: “List the top three key points from the summary.”

- Sentiment Analysis: “Analyze the sentiment of the article and classify it as positive, negative, or neutral.”

This step-by-step approach often improves accuracy and reduces inconsistencies in responses. It also aligns with how humans process complex problems—by tackling them in smaller, manageable parts.

Conclusion

Building an LLM-powered service that people are willing to pay for requires more than just writing good prompts. The key challenges lie in managing unpredictability, enforcing structured outputs, and breaking down tasks into manageable steps. In the next post, I’ll explore how to chain multiple prompts effectively to build more robust and scalable AI-driven services.

Leave a comment