The unsung hero: the Spark Driver

There are various ways of increasing the processing speed when using Spark. Some may be as simple as increasing the number of executors while some may require more complicated tweaking to optimize the SQL query plan. There are great references and explanations for different methods on the web, however, I would like to share another simple factor that can be overlooked. Mainly, changing the configuration for the driver may give a significant boost in data processing; as for my personal experience, I was able to complete the job 3 times faster via this modification. The role of the Spark driver may be neglected and often the simplest changes may result to result in great performance boosts.

Driver configurations to tweak

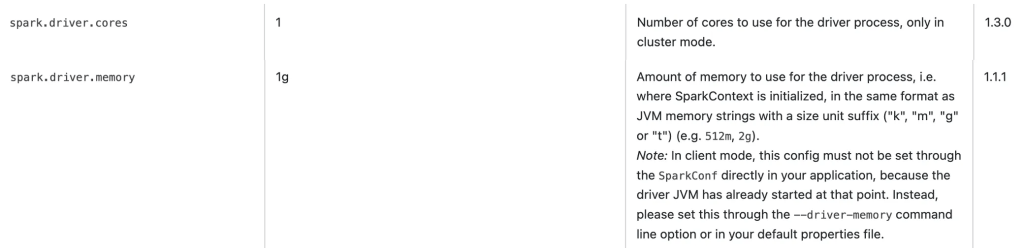

The main driver configurations to consider are the number of cores (spark.driver.cores) and the memory for the driver (spark.driver.memory). The default values for these configurations can be found below:

What does a driver do?

The Spark driver’s main job is to initiate the main function, create the Spark Context and determine the execution plan for the DAG of the job. In addition, the driver schedules tasks based on the availability of executors and manages the data that is stored in each executor. The driver has a crucial role in coordinating task execution, and naturally, as more tasks are run simultaneously, the work the driver has to do increases. In this case, if enough resources are not allocated to the driver to match the increase of parallel tasks, then the driver can become the bottleneck. Therefore, increasing the number of threads for the driver will allow faster processing for the coordination between the tasks/executors.

Personal experience

The situation where I benefited the most was when I wrote Spark scripts that extracted embeddings(features) from machine learning models. The Spark job was divided such that at most 1500 tasks were being processed by multiple executors. In this situation, quadrupling the driver core and memory from its default tripled the speed of data being processed from the spark job.

This is not to say that just increasing the core count or the memory size for the driver will bring significant boosts in efficiency. Other factors like the number of tasks, the size of the partition, etc. need to be considered to find the right setting for the Spark job to run fast. But this article is a reminder that the role of a driver is also very important in running Spark jobs and that their configurations should also be considered when optimizing your Spark jobs.

References

Spark Optimizations

- https://sparkbyexamples.com/spark/spark-performance-tuning/

https://spark.apache.org/docs/latest/sql-performance-tuning.html - https://medium.com/expedia-group-tech/part-3-efficient-executor-configuration-for-apache-spark-b4602929262#:~:text=The%20consensus%20in%20most%20Spark,in%20terms%20of%20parallel%20processing.

Leave a comment